Accelerate your discoveries

Introducing the AMD Instinct™ MI200 Series:

Tap into the power of the world’s first exascale-class GPU accelerators to expedite time to science and discovery

Leadership HPC

Up to 4.9 times faster than competition

Leadership AI

Up to 20% faster than competition

Leadership Science

Fueling exascale discoveries with ROCm™ open ecosystem

What To Ask When Considering HPC

AMD innovations in supercomputing are taking servers to exascale and beyond. Help your customers select the best HPC and AI accelerator to transform their data center workloads.

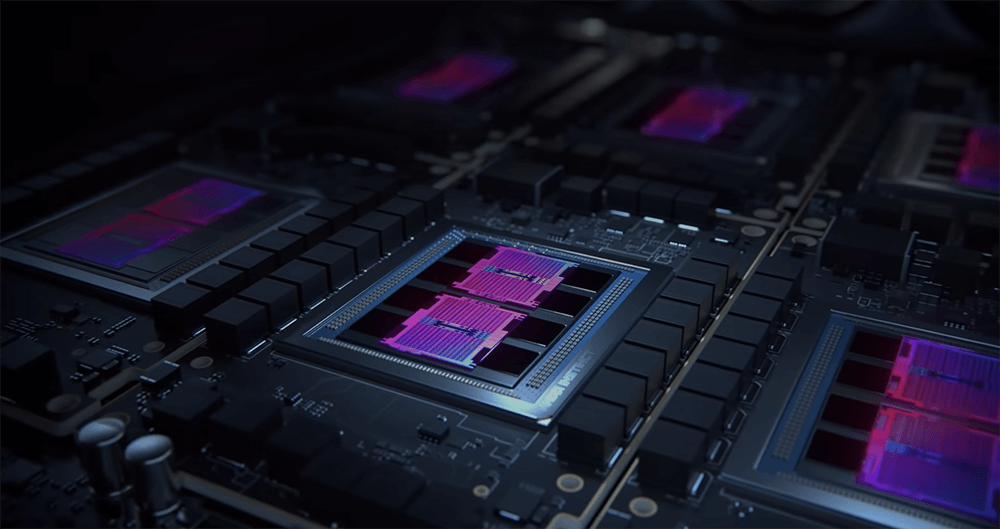

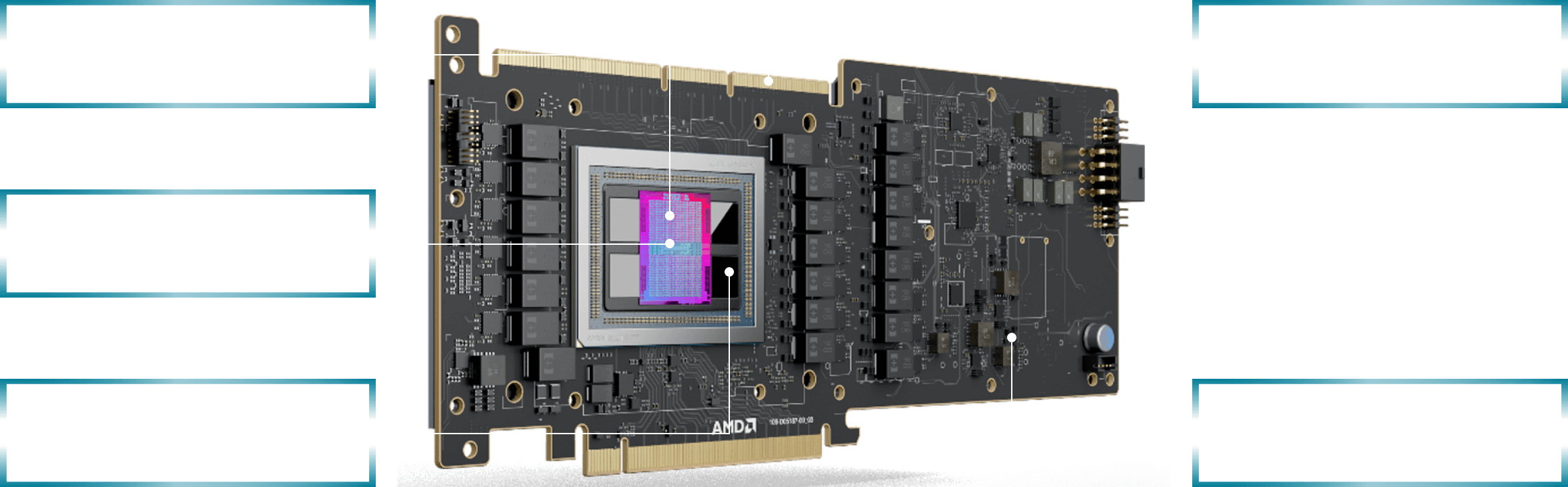

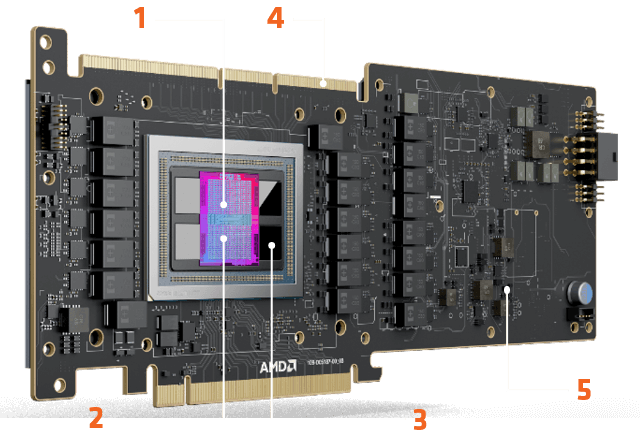

Engineered For The New Era of Digital Computing

Featuring a multi-chip design to maximize performance efficiency and memory throughput, AMD Instinct™ MI200 series accelerators are designed to deliver performance leadership for the data center.

World’s Fastest HPC and AI Data Center Accelerators¹

The AMD Instinct™ MI200 series accelerators are the newest data center GPUs from AMD, designed to power discoveries in exascale systems, enabling scientists to tackle our most pressing challenges from climate change to vaccine research.

AMD Instinct™ MI200 Series

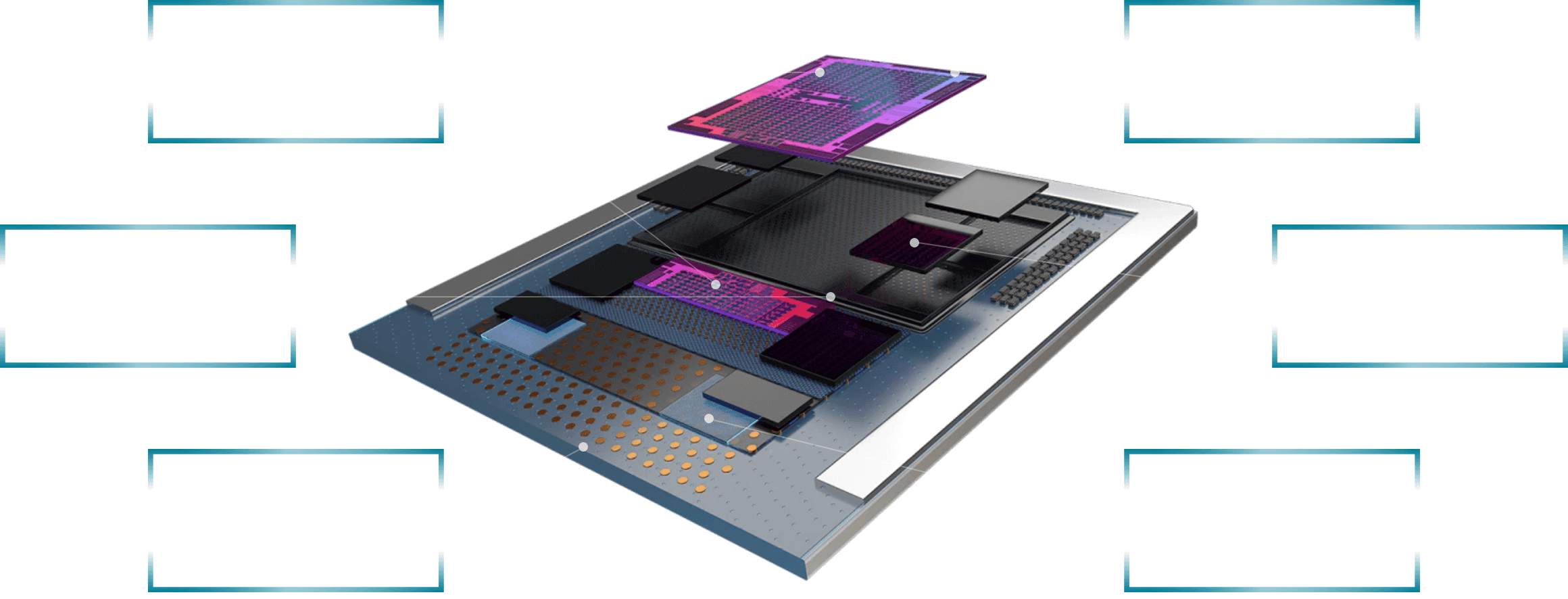

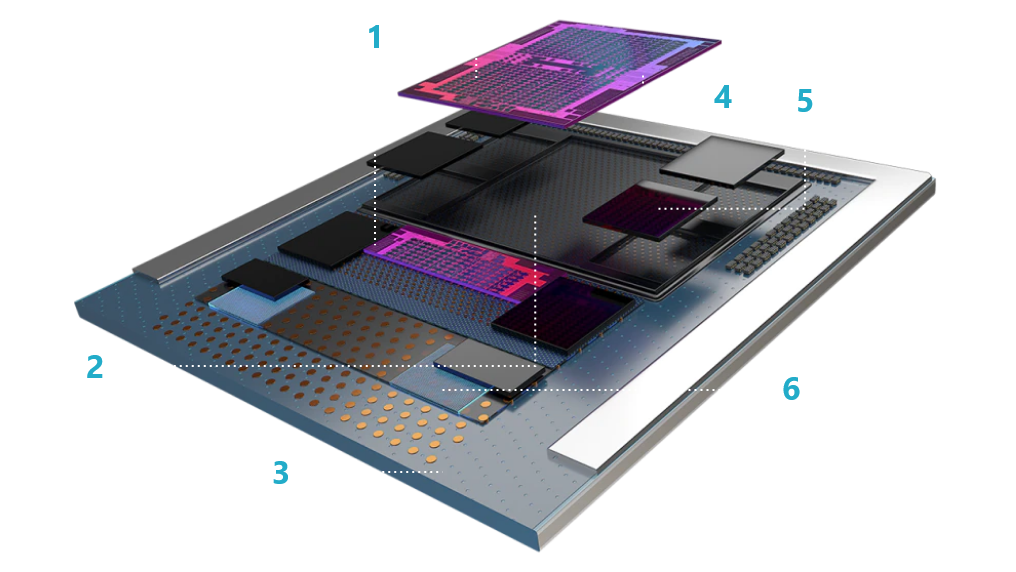

Key Innovations

- Two AMD CDNA™ 2 Dies

- Ultra High Bandwidth Die Interconnect

- Coherent CPU-to-GPU Interconnect

- 2nd Gen Matrix Cores for HPC & AI

- Eight Stacks of HBM2E

- 2.5D Elevated Fanout Bridge (EFB)

AMD Instinct™ MI210 GPU

Exascale technology for MAINSTREAM HPC/AI

Up to 2.3X advantage over A100 in FP64 performance for HPC

Up to 181 TF FP16/BF16 performance for AI/ML training

2X more memory capacity and 33% more bandwidth than MI100

AMD Instinct™ MI210 Accelerator

What's New

- AMD CDNA™ 2 GRAPHICS COMPUTE DIES

- 2nd GEN MATRIX CORES

- 64GB HBM2e @ 1.6 TB/s

- 2-WAY & 4-WAY INFINITY FABRIC™ BRIDGES

- SR-IOV SUPPORT FOR VMWARE®

Open, Portable and Flexible Software Environment

Great hardware needs great software. The open source AMD ROCm™ software environment provides developers with reduced kernel launch latency and faster application performance. With ROCm 5.0, AMD extends its platform powering top HPC and AI applications with AMD Instinct™ MI200 series accelerators. Available resources:

- Ready-to-Deploy software containers and guides on AMD Infinity Hub

- The latest ROCm technical support documents, training materials and tutorials on ROCm™ Learning Center

Expanding support and access

- Support for AMD Radeon™ PRO W6800 Workstation GPUs

- Remote access through the AMD Accelerator Cloud

Optimizing performance

- MI200 Optimizations: FP64 Matrix ops, Improved Cache

- Improved launch latency and kernel performance

Enabling developer success

- HPC Apps & ML Frameworks on AMD Infinity Hub

- Streamlined and improved tools to increase productivity

Case Studies

KT Cloud

Korean cloud computing company KT Cloud is unleashing the possibilities of AI with AMD Instinct™ MI250 accelerators to build a cost-effective and scalable AI cloud service.

Oak Ridge National Laboratory - CHOLLA

Step into an alternate universe! Using AMD Instinct™ accelerators, the Cholla CAAR team is simulating the entire Milky Way.

Oak Ridge National Laboratory - PIConGPU

Leveraging the ROCm platform, ORNL’s PIConGPU taps the immense compute capacity of AMD Instinct™ MI200 accelerators to advance radiation therapy, high-energy physics, and photon science.

Oak Ridge National Laboratory - CoMet

Learn how AMD Instinct™ MI200 accelerators are enabling the ORNL CoMet team to effectively study biological systems from the molecular level to planetary scale.

1. World’s Fastest HPC and AI Data Center Accelerators¹: World’s fastest data center GPU is the AMD Instinct™ MI250X. Calculations conducted by AMD Performance Labs as of Sep 15, 2021, for the AMD Instinct™ MI250X (128GB HBM2e OAM module) accelerator at 1,700 MHz peak boost engine clock resulted in 95.7 TFLOPS peak theoretical double precision (FP64 Matrix), 47.9 TFLOPS peak theoretical double precision (FP64), 95.7 TFLOPS peak theoretical single precision matrix (FP32 Matrix), 47.9 TFLOPS peak theoretical single precision (FP32), 383.0 TFLOPS peak theoretical half precision (FP16), and 383.0 TFLOPS peak theoretical Bfloat16 format precision (BF16) floating-point performance.
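The peak theoretical figures in the footnote above can be roughly reproduced with a short calculation. The sketch below is illustrative only: the 1,700 MHz clock comes from the footnote, but the compute unit count, the stream processors per compute unit, and the per-clock operation counts are assumptions not stated in this document, and the function name `peak_tflops` is hypothetical.

```python
# Sketch: peak theoretical throughput = stream processors x FLOPs per clock x clock.
# ASSUMPTIONS (not stated in the footnote): 220 compute units with 64 stream
# processors each, and the per-clock FLOP counts in the table below.
COMPUTE_UNITS = 220                 # assumed
STREAM_PROCESSORS_PER_CU = 64       # assumed
PEAK_CLOCK_HZ = 1.7e9               # 1,700 MHz peak boost engine clock (from the footnote)

# Assumed FLOPs per stream processor per clock for each data format.
FLOPS_PER_CLOCK = {
    "FP64": 2,
    "FP64 Matrix": 4,
    "FP32": 2,
    "FP32 Matrix": 4,
    "FP16": 16,
    "BF16": 16,
}


def peak_tflops(flops_per_clock: int) -> float:
    """Return peak theoretical TFLOPS for a given FLOPs-per-clock rate."""
    stream_processors = COMPUTE_UNITS * STREAM_PROCESSORS_PER_CU
    return stream_processors * flops_per_clock * PEAK_CLOCK_HZ / 1e12


if __name__ == "__main__":
    for name, rate in FLOPS_PER_CLOCK.items():
        print(f"{name}: {peak_tflops(rate):.1f} TFLOPS")
```

Under these assumptions the results round to the 47.9, 95.7, and 383.0 TFLOPS values quoted in the footnote. These are theoretical ceilings; delivered application performance depends on the workload.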