LEADERSHIP PERFORMANCE AT ANY SCALE

From single-server solutions up to the world’s largest exascale-class supercomputers¹, AMD Instinct™ accelerators are uniquely well suited to power even the most demanding AI and HPC workloads in your data centers.

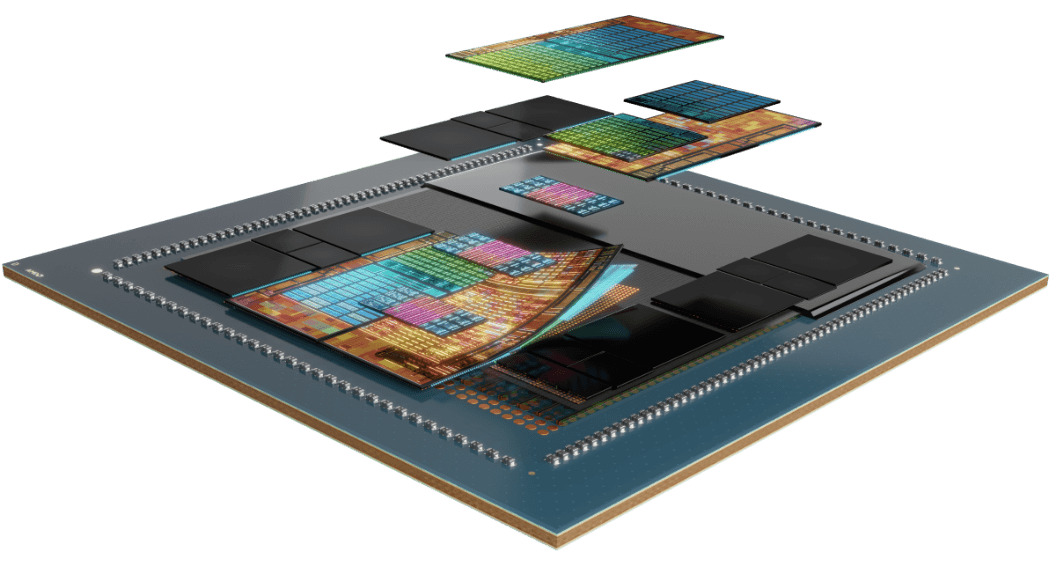

Get more done, more efficiently, with exceptional compute performance, high memory density, high-bandwidth memory, and support for specialized data formats.